About

I am a fourth-year PhD student at the University of Texas at Austin, supervised by Professor Alan Bovik. Before that, I graduated from the National Technical University of Athens with a major in signals, systems and robotics. My main interests are image and video processing, computer vision and machine learning.

Lately, I have been focusing on how subjective Quality of Experience (QoE) varies over time in adaptive streaming scenarios, and on developing objective QoE prediction models.

In my free time, I enjoy listening to music, playing basketball, and reading and writing poetry.

Research

SpEED-QA: Spatial Efficient Entropic Differencing for Image and Video Quality

Many image and video quality assessment (I/VQA) models rely on data transformations of image/video frames, which increases their programming and computational complexity. By comparison, some of the most popular I/VQA models deploy simple spatial bandpass operations at a couple of scales, making them attractive for efficient implementation. Here we design reduced-reference image and video quality models of this type, derived from the high-performance RRED I/VQA models. This new family of I/VQA models, which we call Spatial Efficient Entropic Differencing for Quality Assessment (SpEED-QA), relies on local spatial operations on image frames and frame differences to efficiently compute perceptually relevant image/video quality features.
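As a rough illustration of spatial entropic differencing, the Python sketch below compares block-wise entropies of a bandpass-filtered reference and distorted image. It is a simplified toy under stated assumptions, not the published SpEED-QA model: the 3x3 local-mean subtraction used as the bandpass step, the Gaussian entropy `0.5*log(2*pi*e*(s2 + sigma_n2))`, the `log(1 + s2)` weighting, and the names `local_mean`, `block_variances`, `scaled_entropies` and `speed_like_score` (with `block=5`, `sigma_n2=0.1`) are all hypothetical choices made for this example.

```python
import numpy as np


def local_mean(img, r=1):
    """Box-filter mean over a (2r+1) x (2r+1) window with reflect padding."""
    h, w = img.shape
    padded = np.pad(img, r, mode="reflect")
    acc = np.zeros((h, w))
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            acc += padded[dy:dy + h, dx:dx + w]
    return acc / (2 * r + 1) ** 2


def block_variances(band, block):
    """Variance of each non-overlapping block x block patch (edges cropped)."""
    h, w = band.shape
    h, w = h - h % block, w - w % block
    patches = band[:h, :w].reshape(h // block, block, w // block, block)
    return patches.var(axis=(1, 3))


def scaled_entropies(img, block=5, sigma_n2=0.1):
    """Hypothetical per-block entropy feature of a locally mean-subtracted image."""
    img = np.asarray(img, dtype=float)
    band = img - local_mean(img)  # crude spatial bandpass (assumption)
    s2 = block_variances(band, block)
    # Differential entropy of a Gaussian with variance s2 + sigma_n2.
    entropy = 0.5 * np.log(2 * np.pi * np.e * (s2 + sigma_n2))
    return np.log1p(s2) * entropy  # weight high-variance blocks more


def speed_like_score(ref, dis, block=5, sigma_n2=0.1):
    """Mean absolute difference of block entropies (0 means identical features)."""
    diff = scaled_entropies(ref, block, sigma_n2) - scaled_entropies(dis, block, sigma_n2)
    return float(np.mean(np.abs(diff)))


rng = np.random.default_rng(0)
ref = rng.random((64, 64)) * 255
noisy = ref + rng.normal(0, 10, ref.shape)
print(speed_like_score(ref, ref), speed_like_score(ref, noisy))
```

Comparing an image with itself gives a score of exactly zero, and any distortion that changes local variances raises it. For video, the same feature could be computed on frame differences (for example `next_frame - frame`) to capture temporal information.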

PDF | Code

Recurrent and Dynamic Networks that Predict Streaming Video Quality of Experience

We propose a variety of recurrent dynamic networks that perform continuous-time subjective QoE prediction. By formulating the problem as one of time series forecasting, we train recurrent neural networks and non-linear autoregressive models to predict QoE on several recently developed subjective QoE databases. These models combine multiple, diverse network inputs, such as predicted video quality scores, rebuffering measurements, and data related to memory and its effects on human behavioral responses, and learn from them to predict QoE on video streams impaired by both compression artifacts and rebuffering events. Instead of finding a single time series prediction model, we propose and evaluate ways of aggregating different models into a forecasting ensemble that delivers improved results with reduced forecasting variance. We also deploy appropriate new evaluation metrics for comparing time series predictions in streaming applications. Our experimental results demonstrate improved prediction performance that approaches human performance.

PDF

Learning to Predict Streaming Video QoE: Distortions, Rebuffering and Memory

Mobile streaming video data accounts for a large and increasing percentage of wireless network traffic. The available bandwidths of modern wireless networks are often unstable, making it difficult to deliver smooth, high-quality video. Streaming service providers such as Netflix and YouTube attempt to adapt to these bandwidth limitations by changing the video bitrate or, failing that, by allowing playback interruptions (rebuffering). Being able to predict end users’ quality of experience (QoE) resulting from these adjustments could lead to perceptually driven network resource allocation strategies that would deliver streaming content of higher quality to clients, while being cost-effective for providers. Existing objective QoE models consider the effects on user QoE of either video quality changes or playback interruptions, but not both. For streaming applications, adaptive network strategies may involve a combination of dynamic bitrate allocation along with playback interruptions when the available bandwidth reaches a very low value. Towards more effectively predicting user QoE, we have developed a QoE prediction model called Video Assessment of TemporaL Artifacts and Stalls (Video ATLAS), a learning-based approach that combines a number of QoE-related features, including perceptually relevant quality features, rebuffering-aware features and memory-driven features, to make QoE predictions. We evaluated Video ATLAS on the recently designed LIVE-Netflix Video QoE Database, which consists of practical playout patterns in which the videos are afflicted by both quality changes and rebuffering events, and found that it outperforms state-of-the-art video quality metrics while generalizing well to other datasets. The proposed algorithm is publicly available.
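To make the three feature categories concrete, here is a minimal Python sketch that turns a per-second quality trace and per-second stall flags into one feature vector. The input format, the `q_low` threshold and the four features (`mean_quality`, `n_rebuffer_events`, `rebuffer_fraction`, `time_since_last_impairment`) are hypothetical illustrations of "quality", "rebuffering-aware" and "memory-driven" features; the published Video ATLAS feature set may differ.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class QoEFeatures:
    mean_quality: float              # perceptual quality while playing
    n_rebuffer_events: int           # rebuffering-aware
    rebuffer_fraction: float         # rebuffering-aware
    time_since_last_impairment: int  # memory (recency), in seconds


def extract_features(quality, stalled, q_low=50.0):
    """Summarize per-second quality scores and stall flags into one feature vector.

    quality: per-second objective quality scores (hypothetical 0-100 scale).
    stalled: per-second booleans, True while playback is rebuffering.
    q_low:   hypothetical threshold below which a playing second counts as impaired.
    """
    q = np.asarray(quality, dtype=float)
    s = np.asarray(stalled, dtype=bool)
    playing = ~s
    mean_quality = float(q[playing].mean()) if playing.any() else 0.0
    # A rebuffering event starts wherever the stall flag switches from False to True.
    starts = s & ~np.concatenate(([False], s[:-1]))
    impaired = s | (q < q_low)
    idx = np.flatnonzero(impaired)
    # Recency: seconds between the last impaired second and the end of the video.
    recency = int(len(q) - 1 - idx[-1]) if idx.size else len(q)
    return QoEFeatures(mean_quality, int(starts.sum()), float(s.mean()), recency)


quality = [80, 80, 40, 80, 0, 0, 80, 80, 80, 80]
stalled = [False] * 4 + [True] * 2 + [False] * 4
print(extract_features(quality, stalled))
```

For this toy trace the features are a mean playing quality of 75.0, one rebuffering event, a rebuffering fraction of 0.2 and 4 seconds since the last impairment. A learning-based model would then regress subjective QoE scores on such vectors collected over many videos.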

Continuous Prediction of Streaming Video QoE using Dynamic Networks

Streaming video data accounts for a large portion of mobile network traffic. Given the throughput and buffer limitations that currently affect mobile streaming, compression artifacts and rebuffering events commonly occur. Being able to predict the effects of these impairments on perceived video Quality of Experience (QoE) could lead to improved resource allocation strategies enabling the delivery of higher quality video. Towards this goal, we propose a first-of-its-kind continuous QoE prediction engine. Prediction is based on a non-linear autoregressive model with exogenous inputs (NARX). Our QoE prediction model is driven by three QoE-aware inputs: an objective measure of perceptual video quality, rebuffering-aware information, and a QoE memory descriptor that accounts for recency. We evaluate our method on a recent QoE dataset containing continuous-time subjective scores.
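The NARX structure can be sketched in a few lines of Python: the QoE at time t is a non-linear function of the previous d QoE values and of the current and past d exogenous inputs, and continuous prediction feeds the model's own outputs back in. This is an illustrative stand-in, not the trained network from this work: the class `RandomFeatureNARX`, the fixed random tanh layer with a ridge-regression readout, the lag `d=3`, and the two synthetic input channels (a quality score and a rebuffering flag; a memory descriptor would simply be a third column) are all assumptions.

```python
import numpy as np


class RandomFeatureNARX:
    """Toy NARX model: y(t) = f(y(t-1..t-d), u(t-d+1..t)) with a random tanh layer.

    Only the linear readout is fitted (ridge regression); the hidden layer is
    random and fixed, which keeps the sketch dependency-free.
    """

    def __init__(self, d=3, hidden=50, ridge=1e-3, seed=0):
        self.d, self.hidden, self.ridge, self.seed = d, hidden, ridge, seed

    def _regressor(self, y, u, t):
        # Past outputs (most recent first) followed by current and past inputs.
        return np.concatenate([y[t - self.d:t][::-1], u[t - self.d + 1:t + 1][::-1].ravel()])

    def _features(self, X):
        H = np.tanh(X @ self.W + self.b)
        return np.hstack([H, np.ones((H.shape[0], 1))])  # bias column for the readout

    def fit(self, y, u):
        y = np.asarray(y, dtype=float)
        u = np.asarray(u, dtype=float).reshape(len(y), -1)
        X = np.array([self._regressor(y, u, t) for t in range(self.d, len(y))])
        rng = np.random.default_rng(self.seed)
        self.W = rng.normal(scale=1 / np.sqrt(X.shape[1]), size=(X.shape[1], self.hidden))
        self.b = rng.normal(size=self.hidden)
        H = self._features(X)
        A = H.T @ H + self.ridge * np.eye(H.shape[1])
        self.beta = np.linalg.solve(A, H.T @ y[self.d:])
        return self

    def predict_closed_loop(self, y_init, u):
        """Continuous prediction: after d seed values, feed predictions back in."""
        u = np.asarray(u, dtype=float).reshape(len(u), -1)
        y = np.zeros(len(u))
        y[:self.d] = np.asarray(y_init, dtype=float)[:self.d]
        for t in range(self.d, len(u)):
            x = self._regressor(y, u, t)[None, :]
            y[t] = (self._features(x) @ self.beta)[0]
        return y


# Synthetic data: hypothetical quality and rebuffering inputs driving a QoE trace.
rng = np.random.default_rng(1)
T = 300
quality = 0.5 + 0.4 * np.sin(np.arange(T) / 20)
rebuffer = (rng.random(T) < 0.05).astype(float)
u = np.column_stack([quality, rebuffer])
qoe = np.zeros(T)
for t in range(1, T):
    qoe[t] = 0.8 * qoe[t - 1] + 0.2 * quality[t] - 0.3 * rebuffer[t]

model = RandomFeatureNARX(d=3).fit(qoe, u)
pred = model.predict_closed_loop(qoe[:3], u)
print(np.sqrt(np.mean((pred - qoe) ** 2)))
```

The closed-loop mode is what makes the prediction continuous: only the first d QoE values are observed, and every later value depends on earlier predictions plus the exogenous inputs.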

PDF | Webpage

Study of Temporal Effects on Subjective Video Quality of Experience

HTTP adaptive streaming is increasingly being deployed by network content providers such as Netflix and YouTube. When video content is divided into data chunks encoded at different bitrates, a client can request the appropriate bitrate for the next segment to be played based on the estimated network conditions. However, this can introduce a number of impairments, including compression artifacts and rebuffering events, which can severely impact an end user’s quality of experience (QoE). We have recently created a new video quality database that simulates a typical video streaming application, using long video sequences and interesting Netflix content. Going beyond previous efforts, the new database contains highly diverse and contemporary content, and it includes the subjective opinions of a sizable number of human subjects regarding the effects on QoE of both rebuffering and compression distortions. We observed that rebuffering is always obvious and unpleasant to subjects, while bitrate changes may be less obvious due to content-related dependencies. Transient bitrate drops were preferable to rebuffering only on low-complexity video content, while consistently low bitrates were poorly tolerated. We evaluated different objective video quality assessment algorithms on our database and found that objective video quality models are unreliable for QoE prediction on videos suffering from both rebuffering events and bitrate changes. This implies the need for more general QoE models that take into account objective quality models, rebuffering-aware information, and memory. The video content, as well as metadata for all of the videos in the new database, is publicly available.

This work has been accepted for publication in IEEE Transactions on Image Processing.

Sampled Efficient Full-Reference Image Quality Assessment Models

Existing full-reference image quality assessment models first compute a full image quality-predictive feature map, followed by a spatial pooling scheme, thereby producing a single quality score. Here we study spatial sampling strategies that can be used to more efficiently compute reliable picture quality scores. We develop a random sampling scheme for single-scale full-reference image quality assessment models. Based on a thorough analysis of how this random sampling strategy affects the correlations of the resulting pooled scores against human subjective quality judgments, we propose a highly efficient grid sampling scheme that replaces the ubiquitous convolution operations with local block-based multiplications. Experiments on four different databases show that this block-based sampling strategy can yield results similar to those of methods that use a complete image feature map, even when the number of feature samples is reduced by 90%.
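To make the grid-sampling idea concrete, the Python sketch below computes SSIM from non-overlapping block statistics (block means, variances and covariances instead of sliding-window convolutions) and, with `step > 1`, evaluates only a regular grid of blocks. SSIM is used here only as an example single-scale full-reference model; the function name `sampled_block_ssim` and its `block` and `step` parameters are hypothetical, and the sketch does not reproduce the sampling analysis or the 90% figure reported above.

```python
import numpy as np

C1 = (0.01 * 255) ** 2  # standard SSIM stabilizing constants for 8-bit images
C2 = (0.03 * 255) ** 2


def sampled_block_ssim(ref, dis, block=8, step=1):
    """Mean SSIM over non-overlapping block x block patches.

    step > 1 keeps only every step-th block along each axis (a regular grid),
    so statistics are computed for roughly 1/step**2 of the blocks. Block means
    and variances replace the sliding-window (convolution) statistics.
    """
    ref = np.asarray(ref, dtype=float)
    dis = np.asarray(dis, dtype=float)
    h, w = ref.shape
    h, w = h - h % block, w - w % block

    def grid(img):
        patches = img[:h, :w].reshape(h // block, block, w // block, block)
        return patches[::step, :, ::step, :]  # sampled blocks only

    x, y = grid(ref), grid(dis)
    mx, my = x.mean(axis=(1, 3)), y.mean(axis=(1, 3))
    vx, vy = x.var(axis=(1, 3)), y.var(axis=(1, 3))
    cxy = ((x - mx[:, None, :, None]) * (y - my[:, None, :, None])).mean(axis=(1, 3))
    ssim = ((2 * mx * my + C1) * (2 * cxy + C2)) / ((mx**2 + my**2 + C1) * (vx + vy + C2))
    return float(ssim.mean())


rng = np.random.default_rng(0)
ref = rng.random((128, 128)) * 255
dis = np.clip(ref + rng.normal(0, 20, ref.shape), 0, 255)
full = sampled_block_ssim(ref, dis, step=1)    # all 16 x 16 = 256 blocks
sparse = sampled_block_ssim(ref, dis, step=3)  # 6 x 6 = 36 blocks (about 14%)
print(full, sparse)
```

Because `reshape` and strided slicing return views, only the sampled blocks take part in the arithmetic, which is where the savings over a full feature map would come from.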

Paper

Graph-Driven Diffusion and Random Walk Schemes for Image Segmentation

We propose graph-driven approaches to image segmentation by developing diffusion processes defined on arbitrary graphs. We model the image segmentation problem as the result of infectious wavefronts propagating on an image-driven graph, where pixels correspond to the nodes of an arbitrary graph.

If you use this code in your work, please consider citing the following work:

C. G. Bampis, P. Maragos and A. C. Bovik, “Graph-Driven Diffusion and Random Walk Schemes for Image Segmentation,” in IEEE Transactions on Image Processing, vol. 26, no. 1, pp. 35-50, Jan. 2017.
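The general idea of labels spreading from seed pixels over an image-driven graph can be illustrated with a simple seeded diffusion in Python. This is a plainly simplified stand-in (iterative label propagation on a 4-connected pixel graph with Gaussian intensity weights), not the SIR-based formulation of the paper; the function `diffuse_labels` and its parameters `beta` and `n_iter` are hypothetical.

```python
import numpy as np


def diffuse_labels(img, seeds, n_labels, beta=10.0, n_iter=500):
    """Seeded diffusion on a 4-connected pixel graph.

    img:   2-D grayscale image.
    seeds: int array of the same shape, -1 for unlabeled pixels, else a label index.
    Returns the label whose diffused "infection" level is highest at each pixel.
    """
    img = np.asarray(img, dtype=float)
    img = (img - img.min()) / (np.ptp(img) + 1e-12)
    h, w = img.shape
    # Edge weights to the right and lower neighbours: small across strong edges.
    wr = np.exp(-beta * (img[:, 1:] - img[:, :-1]) ** 2)  # shape (h, w-1)
    wd = np.exp(-beta * (img[1:, :] - img[:-1, :]) ** 2)  # shape (h-1, w)
    deg = np.zeros((h, w))
    deg[:, :-1] += wr
    deg[:, 1:] += wr
    deg[:-1, :] += wd
    deg[1:, :] += wd

    seeded = seeds >= 0
    f = np.zeros((n_labels, h, w))
    for k in range(n_labels):
        f[k][seeds == k] = 1.0

    for _ in range(n_iter):
        acc = np.zeros_like(f)
        acc[:, :, :-1] += wr * f[:, :, 1:]  # contribution from right neighbour
        acc[:, :, 1:] += wr * f[:, :, :-1]  # from left neighbour
        acc[:, :-1, :] += wd * f[:, 1:, :]  # from lower neighbour
        acc[:, 1:, :] += wd * f[:, :-1, :]  # from upper neighbour
        f = acc / deg  # weighted average of neighbour levels
        for k in range(n_labels):
            f[k][seeded] = seeds[seeded] == k  # keep seeds clamped
    return f.argmax(axis=0)


img = np.zeros((20, 20))
img[:, 10:] = 1.0                    # two flat regions separated by a vertical edge
seeds = -np.ones((20, 20), dtype=int)
seeds[10, 2], seeds[10, 17] = 0, 1   # one seed per region
labels = diffuse_labels(img, seeds, n_labels=2, beta=50.0, n_iter=1000)
print(labels[0])
```

The strong intensity edge makes the crossing weight tiny, so each seed's wavefront fills its own region and the segmentation follows the edge.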

Paper | Code

Projective Non-Negative Matrix Factorization for Unsupervised Graph Clustering

Visual data (images, video, point clouds) are usually represented by regular graphs (e.g., image grids). However, this regular structure does not capture the intrinsic structure of the data. Here, we propose an unsupervised graph clustering approach based on matrix factorization. By factorizing the image feature matrix and regularizing the solution based on an image-driven graph, we are able to perform graph clustering and image segmentation simultaneously. The versatility of this approach is highlighted by its ability to be applied to other arbitrary graph structures, such as point clouds captured by a Kinect sensor.

If you use this code in your work, please consider citing the following work:

C. G. Bampis, P. Maragos and A. C. Bovik, “Projective non-negative matrix factorization for unsupervised graph clustering,” in Proc. IEEE International Conference on Image Processing (ICIP), Phoenix, AZ, USA, 2016.
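For readers unfamiliar with projective NMF, the Python sketch below shows the basic multiplicative update for minimizing ||X - W W^T X||_F^2 over a non-negative W, with cluster labels read off as the largest entry in each row of W. It is a minimal sketch of plain PNMF only: the image-driven graph regularization described above is deliberately omitted, the per-iteration spectral-norm rescaling is a stabilizing assumption of this sketch, and the name `pnmf_cluster` and its parameters are hypothetical.

```python
import numpy as np


def pnmf_cluster(X, k, n_iter=300, seed=0, eps=1e-12):
    """Cluster the rows of a non-negative matrix X (n x d) with basic projective NMF.

    Minimizes ||X - W W^T X||_F^2 over non-negative W (n x k) with the
    multiplicative rule W <- W * 2AW / (W W^T A W + A W W^T W), where A = X X^T.
    No graph regularization is included in this sketch.
    """
    X = np.asarray(X, dtype=float)
    if (X < 0).any():
        raise ValueError("X must be non-negative")
    A = X @ X.T
    rng = np.random.default_rng(seed)
    W = rng.random((X.shape[0], k)) + eps
    for _ in range(n_iter):
        AW = A @ W
        denom = W @ (W.T @ AW) + AW @ (W.T @ W)
        W *= 2.0 * AW / (denom + eps)
        W /= np.linalg.norm(W, 2)  # rescale by spectral norm (stabilizing assumption)
    return W, W.argmax(axis=1)


rng = np.random.default_rng(1)
X = np.vstack([
    np.column_stack([1 + 0.1 * rng.random(5), 0.1 * rng.random(5)]),
    np.column_stack([0.1 * rng.random(5), 1 + 0.1 * rng.random(5)]),
])
W, labels = pnmf_cluster(X, k=2)
print(labels)
```

Multiplicative updates keep W non-negative without explicit projection, and the near-orthogonality that PNMF encourages in W is what makes its rows usable as soft cluster memberships. A graph-regularized variant would add a smoothness term built from the image-driven graph to the objective.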

Paper | Code | Supplementary | Demo

Single-Hand Motion Gesture Recognition using machine learning and image processing techniques

In this project, we developed a Single-Hand Motion Gesture Recognition system from scratch, using a set of machine learning and image processing methods that are described in the project’s report. This work received the Digital Video Class 2015 Best Project award.

Class Paper

Graph Clustering using Random Walker

The aim of this work was to explore an interesting connection between the SIR (Susceptible-Infected-Recovered) epidemic model and the Random Walker algorithm for image segmentation. Building on this connection, the Random Walker can be improved by extending it to arbitrary graph structures, which yields better results and reduces the problem’s dimensionality.

This work was presented at ICIP15, Quebec.

Class Paper | Code

Personal

Here is a list of my favorite things:

- favorite food: anything spaghetti-related

- favorite movie: Star Wars (old trilogy only!)

- favorite city: Athens, Greece

- favorite hobby: reading and writing

- favorite writer: C. Baudelaire

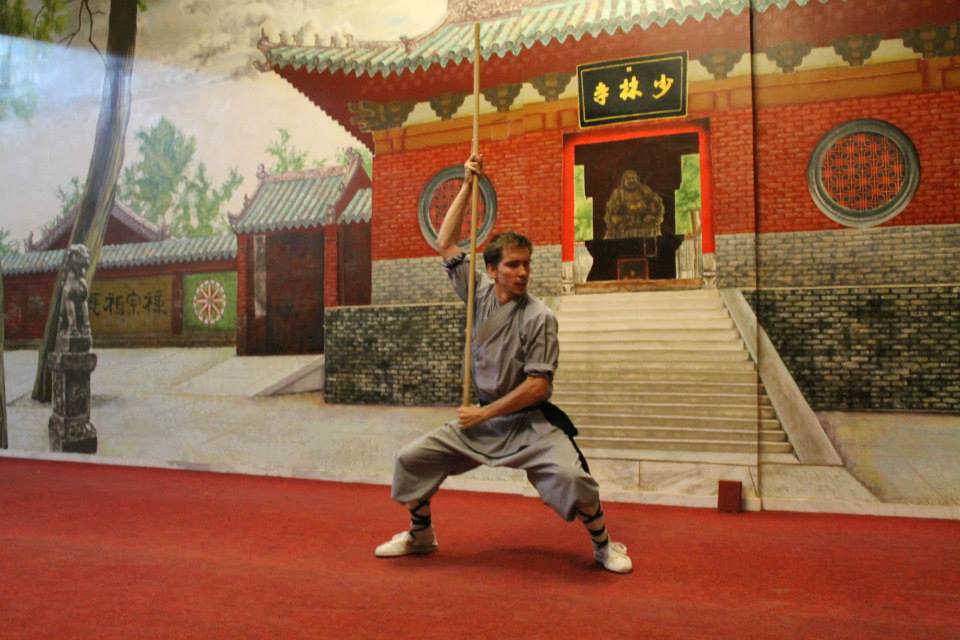

- I used to practice Shaolin Kung Fu; here is a picture from those amazing days.